Can We Get Column Names Agains the Column Values in Datastage

Earlier you offset any data project, you need to take a footstep back and wait at the dataset before doing anything with it. Exploratory Data Analysis (EDA) is just as important equally any part of data analysis considering real datasets are really messy, and lots of things tin can go wrong if you don't know your data. The Pandas library is equipped with several handy functions for this very purpose, and value_counts is one of them. Pandas value_counts returns an object containing counts of unique values in a pandas dataframe in sorted order. Withal, near users tend to overlook that this function can exist used not only with the default parameters. And then in this article, I'll show yous how to go more than value from the Pandas value_counts by altering the default parameters and a few additional tricks that will save you time.

What is value_counts() function?

The value_counts() office is used to get a Series containing counts of unique values. The resulting object will be in descending order so that the commencement element is the virtually frequently-occurring element. Excludes NA values by default.

Syntax

df['your_column'].value_counts() - this will return the count of unique occurences in the specified column.

It is important to note that value_counts just works on pandas series, not Pandas dataframes. Every bit a event, we only include one subclass df['your_column'] and non two brackets df[['your_column']].

Parameters

- normalize (bool, default False) - If Truthful then the object returned will contain the relative frequencies of the unique values.

- sort (bool, default True) - Sort by frequencies.

- ascending (bool, default Simulated) - Sort in ascending order.

- bins (int, optional) - Rather than count values, group them into one-half-open bins, a convenience for

pd.cut, only works with numeric information. - dropna (bool, default True) -Don't include counts of NaN.

Loading a dataset for alive demo

Allow's see the basic usage of this method using a dataset. I'll exist using the Coursera Course Dataset from Kaggle for the live demo. I accept also published an accompanying notebook on git, in case you want to get my code.

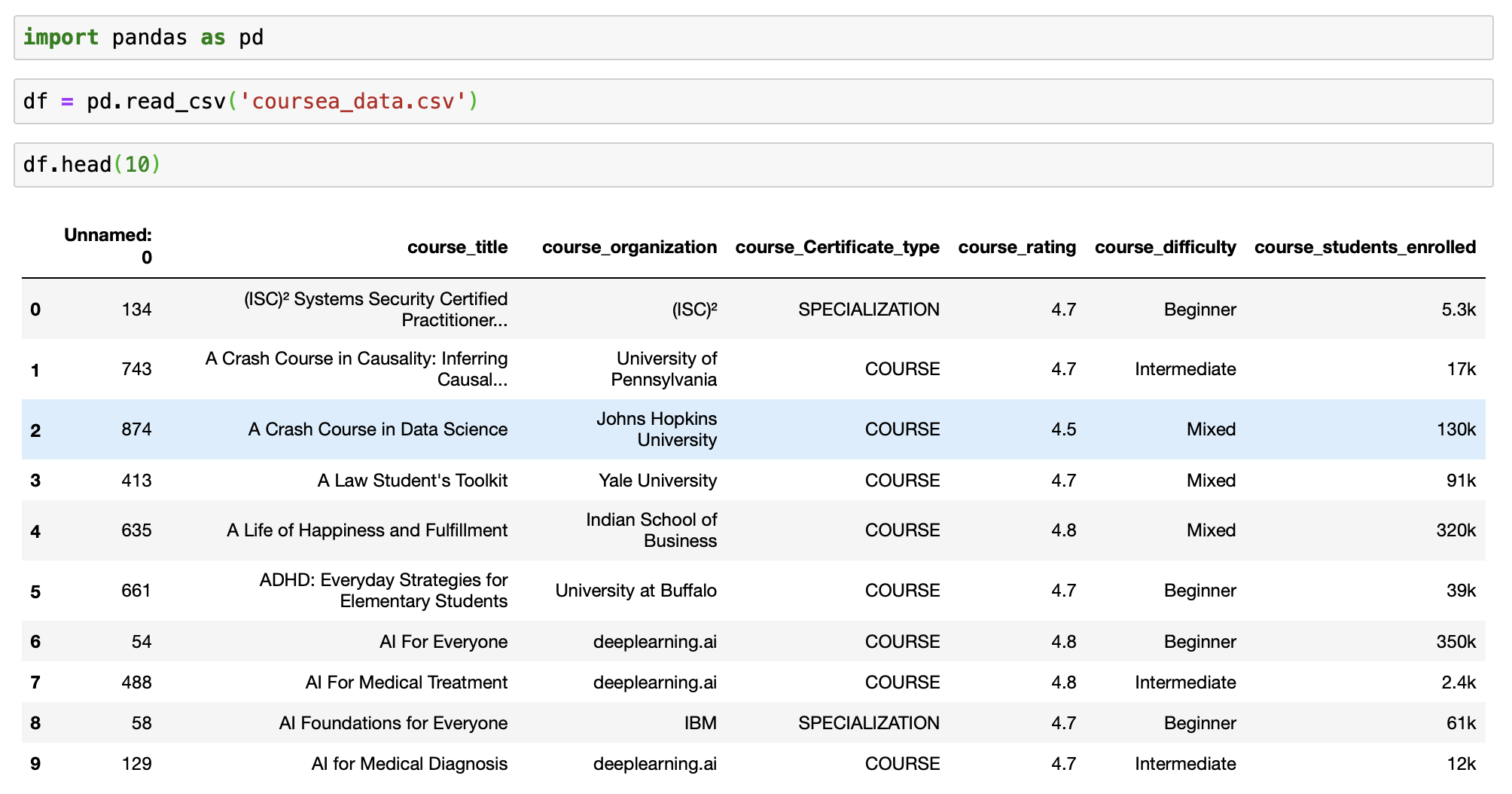

Let'due south start by importing the required libraries and the dataset. This is a fundamental stride in every information assay procedure. And and then review the dataset in Jupyter notebooks.

# import package import pandas every bit pd # Loading the dataset df = pd.read_csv('coursea_data.csv') #quick look about the information of the csv df.head(10)

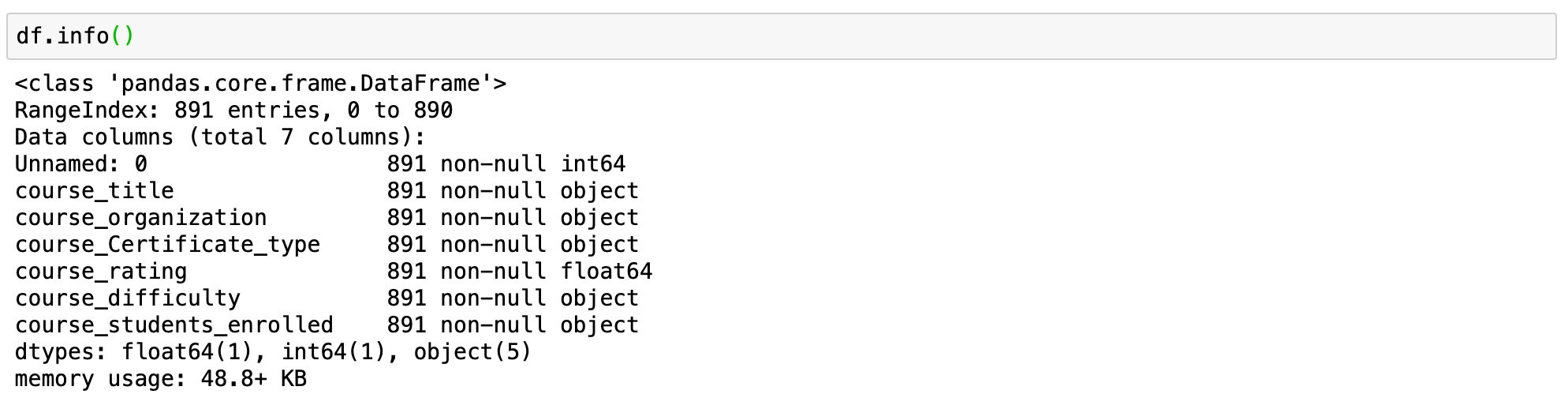

# cheque how many records are in the dataset # and if we have any NA df.info()

This tells us that we accept 891 records in our dataset and that we don't have any NA values.

1. ) value_counts() with default parameters

Now we are ready to utilise value_counts function. Let begin with the basic application of the function.

Syntax - df['your_column'].value_counts()

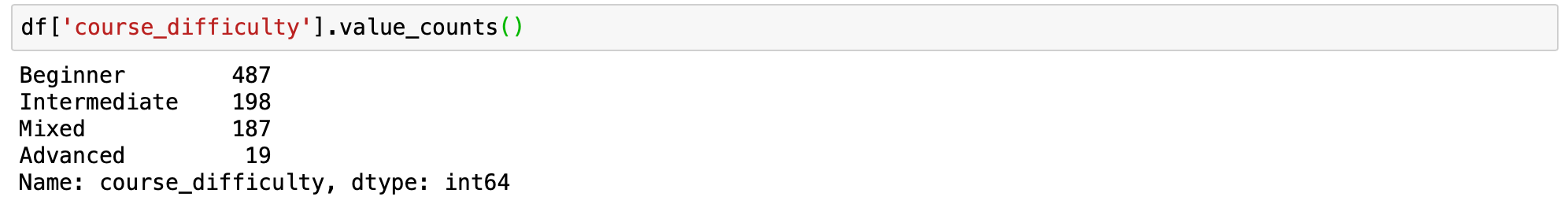

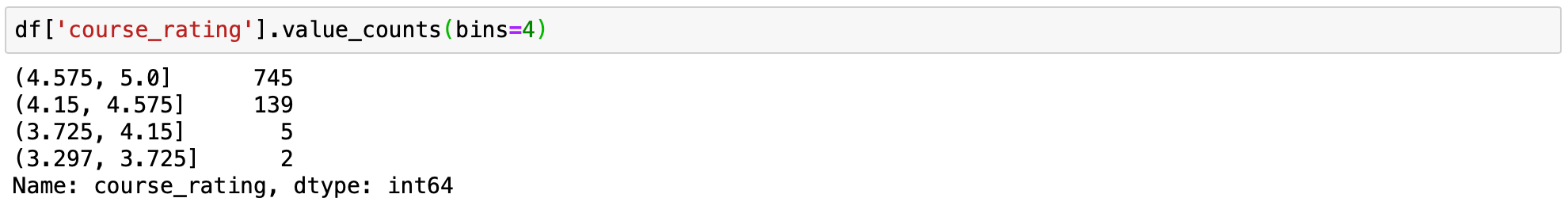

We will become counts for the column course_difficulty from our dataframe.

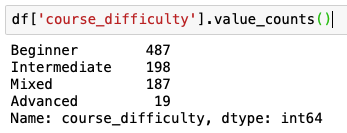

# count of all unique values for the cavalcade course_difficulty df['course_difficulty'].value_counts()

The value_counts function returns the count of all unique values in the given index in descending order without any null values. Nosotros can quickly meet that the maximum courses have Beginner difficulty, followed by Intermediate and Mixed, and then Advanced.

At present that we understand the bones apply of the part, it is time to effigy out what parameters practice.

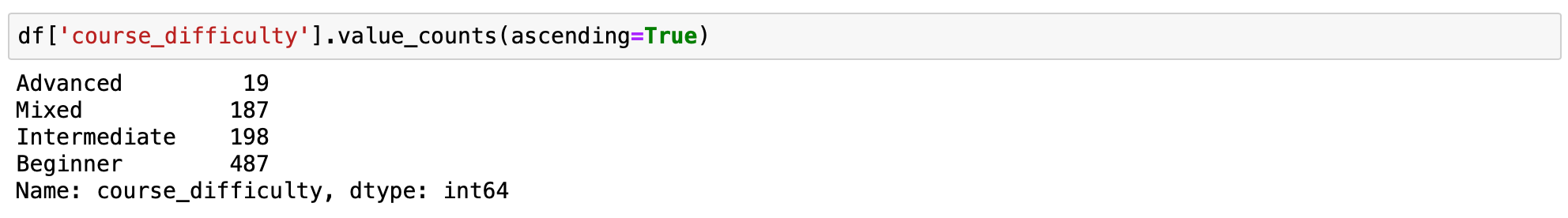

ii.) value_counts() in ascending order

The series returned by value_counts() is in descending social club by default. We can reverse the case past setting the ascending parameter to True.

Syntax - df['your_column'].value_counts(ascending=True)

# count of all unique values for the column course_difficulty # in ascending order df['course_difficulty'].value_counts(ascending=True)

iii.) value_counts() sorted alphabetically

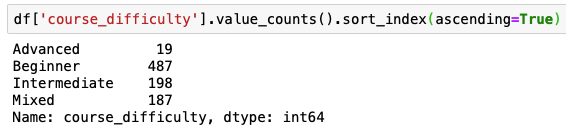

In some cases it is necessary to display your value_counts in an alphabetical guild. This tin can be done easily past adding sort index sort_index(ascending=True) after your value_counts().

Default value_counts() for column "course_difficulty" sorts values past counts:

Value_counts() with sort_index(ascending=True) sorts by index (column that you are running value_counts() on:

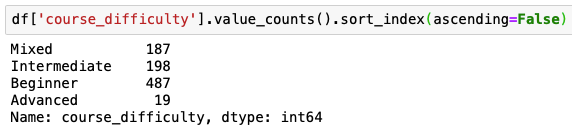

If yous desire to list value_counts() in opposite alphabetical social club y'all will need to change ascending to False sort_index(ascending=False)

4.) Pandas value_counts(): sort by value, and then alphabetically

Lets use for this example a slightly diffrent dataframe.

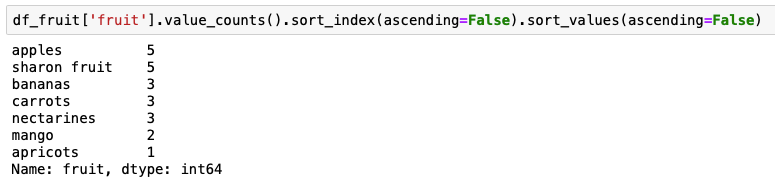

df_fruit = pd.DataFrame({ 'fruit': ['sharon fruit']*five + ['apples']*5 + ['bananas']*iii + ['nectarines']*3 + ['carrots']*3 + ['apricots'] + ['mango']*2 }) Here we want to get output sorted commencement by the value counts, then alphabetically by the name of the fruit. This can be done by combining value_counts() with sort_index(ascending=False) and sort_values(ascending=False).

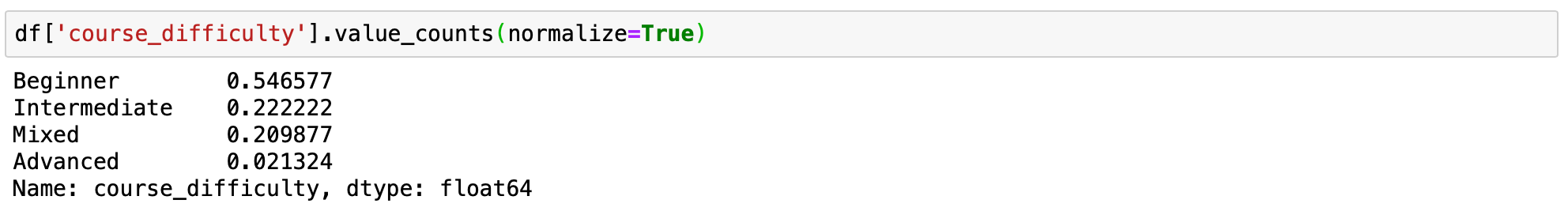

five.) value_counts() persentage counts or relative frequencies of the unique values

Sometimes, getting a percentage count is amend than the normal count. By setting normalize=True, the object returned will comprise the relative frequencies of the unique values. The normalize parameter is set to Faux by default.

Syntax - df['your_column'].value_counts(normalize=Truthful)

# value_counts percentage view df['course_difficulty'].value_counts(normalize=True)

6.) value_counts() to bin continuous information into discrete intervals

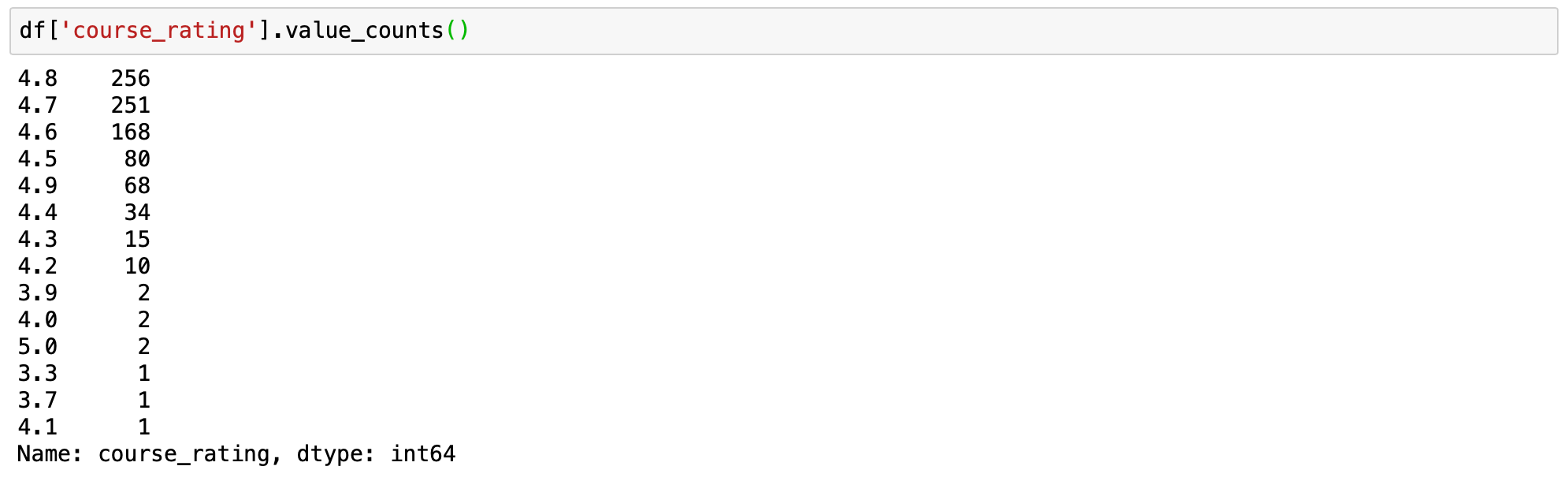

This is one bang-up hack that is commonly under-utilised. The value_counts() can be used to bin continuous data into discrete intervals with the help of the bin parameter. This pick works only with numerical data. It is similar to the pd.cut office. Allow's see how it works using the course_rating column. Permit's group the counts for the column into 4 bins.

Syntax - df['your_column'].value_counts(bin = number of bins)

# applying value_counts with default parameters df['course_rating'].value_counts() # applying value_counts on a numerical column # with the bin parameter df['course_rating'].value_counts(bins=4)

Binning makes it easy to understand the idea being conveyed. We tin hands see that most of the people out of the total population rated courses above 4.5. With just a few outliers where the rating is beneath 4.15 (simply seven rated courses lower than 4.15).

vii.) value_counts() displaying the NaN values

By default, the count of null values is excluded from the result. Only, the same can exist displayed easily by setting the dropna parameter to False. Since our dataset does not have whatsoever null values setting dropna parameter would non make a difference. Merely this tin can be of use on some other dataset that has null values, so keep this in mind.

Syntax - df['your_column'].value_counts(dropna=Simulated)

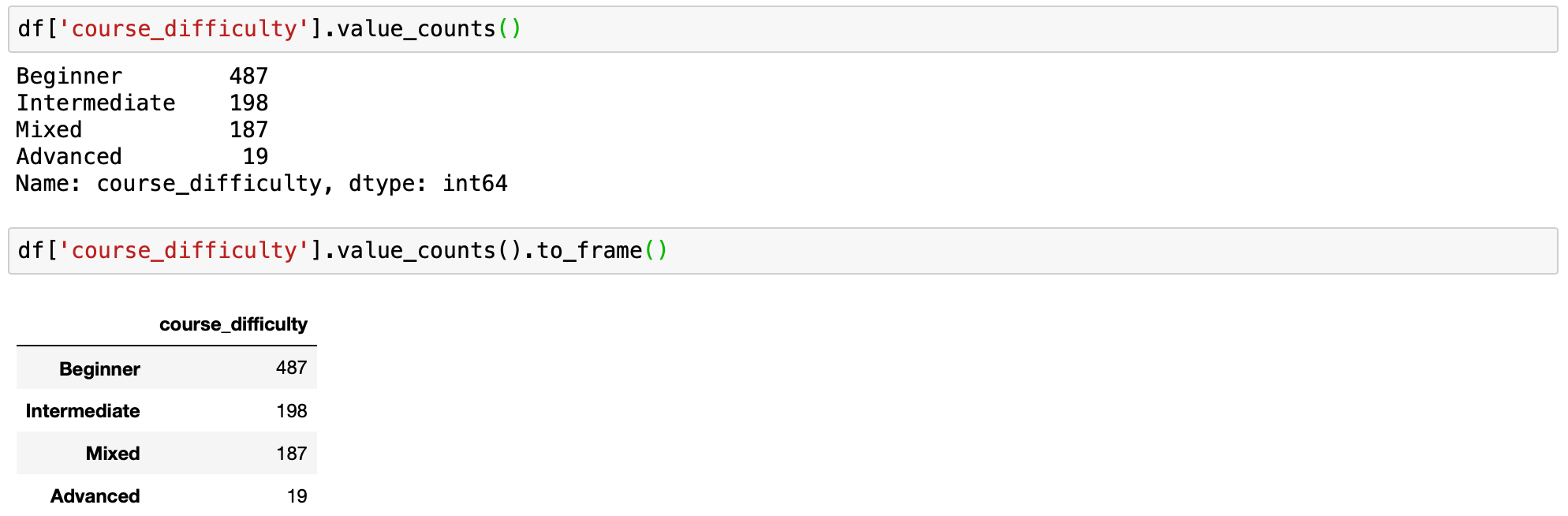

8.) value_counts() equally dataframe

Equally mentioned at the start of the article, value_counts returns series, not a dataframe. If y'all want to have your counts as a dataframe you can do it using role .to_frame() after the .value_counts().

Nosotros tin convert the series to a dataframe as follows:

Syntax - df['your_column'].value_counts().to_frame()

# applying value_counts with default parameters df['course_difficulty'].value_counts() # value_counts as dataframe df['course_difficulty'].value_counts().to_frame()

If you need to proper noun index cavalcade and rename a cavalcade, with counts in the dataframe you can convert to dataframe in a slightly different fashion.

value_counts = df['course_difficulty'].value_counts() # converting to df and assigning new names to the columns df_value_counts = pd.DataFrame(value_counts) df_value_counts = df_value_counts.reset_index() df_value_counts.columns = ['unique_values', 'counts for course_difficulty'] # modify cavalcade names df_value_counts ix.) Group by and value_counts

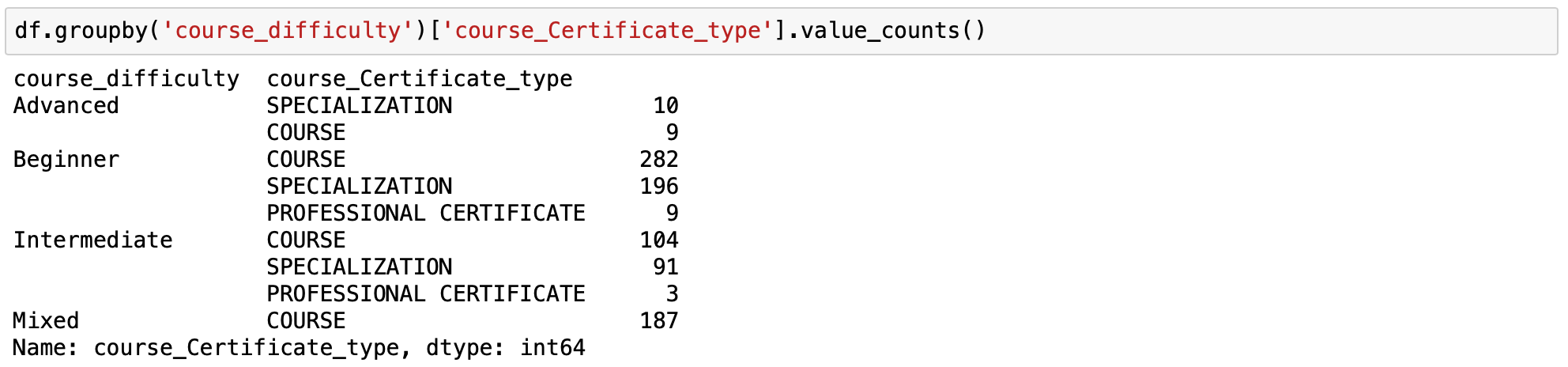

This is one of my favourite uses of the value_counts() office and an underutilized one as well. Groupby is a very powerful pandas method. You can group past ane column and count the values of some other cavalcade per this column value using value_counts.

Syntax - df.groupby('your_column_1')['your_column_2'].value_counts()

Using groupby and value_counts we can count the number of certificate types for each type of class difficulty.

This is a multi-index, a valuable trick in pandas dataframe which allows us to accept a few levels of alphabetize hierarchy in our dataframe. In this case, the course difficulty is the level 0 of the index and the certificate type is on level 1.

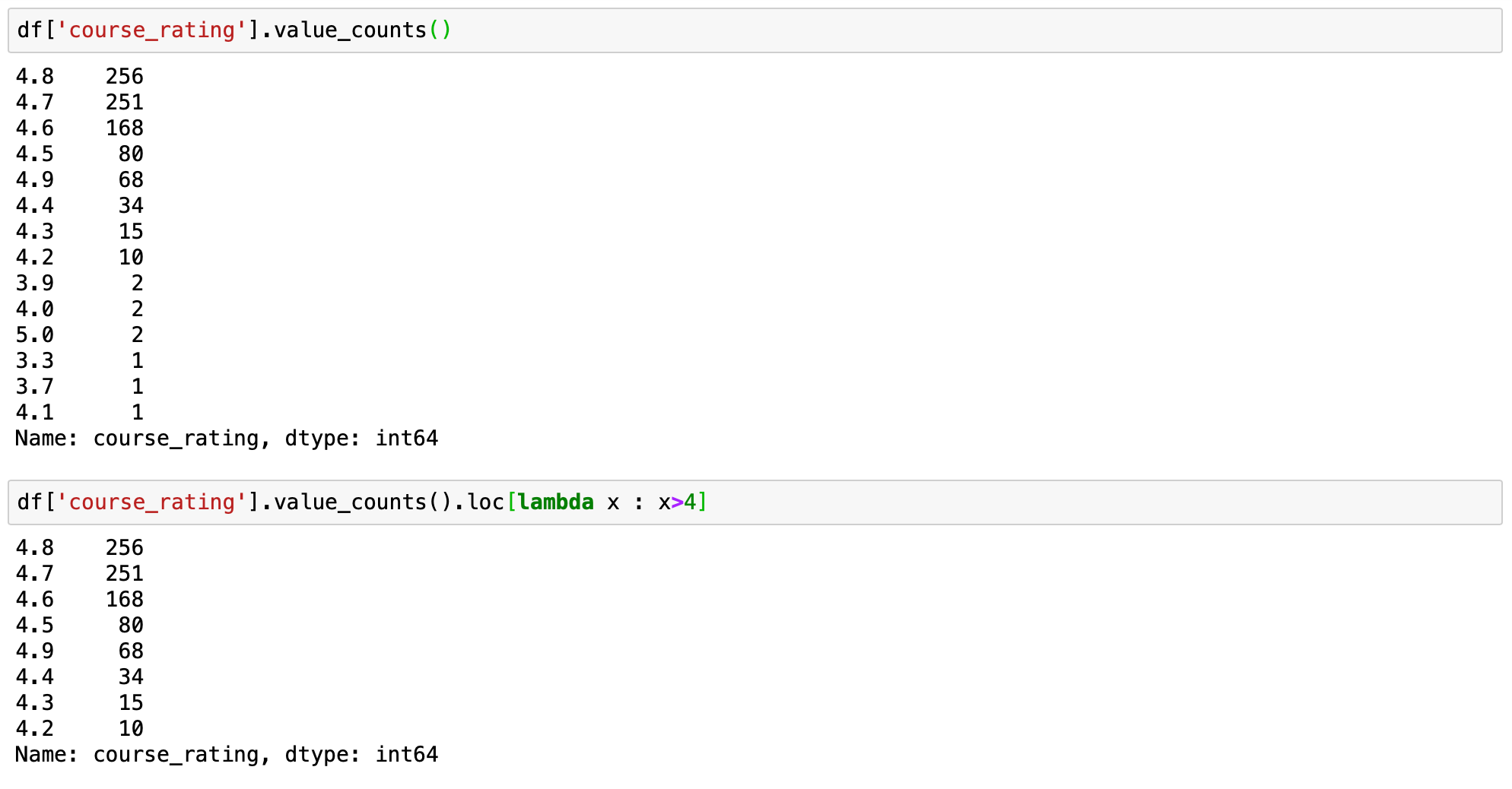

x. Pandas Value Counts With a Constraint

When working with a dataset, you may demand to render the number of occurrences by your index cavalcade using value_counts() that are also limited by a constraint.

Syntax - df['your_column'].value_counts().loc[lambda x : x>1]

The in a higher place quick ane-liner will filter out counts for unique data and see but data where the value in the specified cavalcade is greater than 1.

Allow's demonstrate this by limiting course rating to exist greater than 4.

# prints standart value_counts for the column df['course_rating'].value_counts() # prints filtered value_counts for the column df['course_rating'].value_counts().loc[lambda 10 : x>4]

Hence, we can run into that value counts is a handy tool, and we tin can practise some interesting analysis with this unmarried line of code.

Source: https://re-thought.com/pandas-value_counts/

0 Response to "Can We Get Column Names Agains the Column Values in Datastage"

Postar um comentário